Welcome to Ranak’s Homepage!

I’m a full-time Applied Scientist II (Level 5) with Amazon Web Services (AWS), specializing in Forecasting for Supply Chain Optimization. I develop accurate, robust, and scalable forecasting models for Demand and Supply Planning, as part of the AWS Supply Chain group.

Prior to this, I was a Ph.D. student at the University of California, San Diego, where I built efficient sensory learning systems for Wearable Sensing and Human Motion Recognition. I am grateful to Qualcomm and the Halıcıoğlu Data Science Institute for the fellowships that supported my research. I was an invited keynote speaker at the SIGKDD 2023 Workshop on Machine Learning in Finance.

During my Ph.D., I spent two wonderful summers as an Applied Scientist II Intern at AWS AI, working on accent-robust speech recognition and LLM-based music understanding. I also spent one summer as a Data Science Intern at Bell Labs and another as a Software Development Engineer Intern at AWS Redshift.

Research Interests

I am broadly interested in building applications from time-series data, including:

- Supply Chain - Improving demand forecasting to support accurate resource planning, inventory optimization, exception detection, root cause analysis, and actionable recommendations in retail and manufacturing.

- Wearable Sensing & Healthcare - Leveraging kinematic data, enriched with physical and semantic context, to enhance action recognition, and physiological signals to improve health assessment.

- Audio Analysis - Improving the accent robustness of speech models and designing chatbots for audio and music interaction.

To learn more about my work, please see my projects and relevant publications.

News

[March 2025] The inaugural workshop on “AI for Supply Chain: Today and Future” has been accepted at KDD ’25, to be held in Toronto, ON, Canada, Aug 3-4, 2025. [Proposal]

[March 2025] Downstream Task Guided Masking Learning in Masked Autoencoders Using Multi-Level Optimization has been accepted to TMLR.

[December 2024] ZeroHAR: Sensor Context Augments Zero-Shot Wearable Action Recognition has been accepted to AAAI ’25.

[September 2024] UniMTS: Unified Pre-training for Motion Time Series, the first pre-trained model for human motion time series, has been accepted to NeurIPS ’24.

[September 2024] Started my first full-time job as an Applied Scientist II (Level 5) at AWS Supply Chain in Bellevue, WA.

[August 2024] Successfully defended my Ph.D. thesis on Efficient Learning for Sensor Time Series in Label Scarce Applications.

[April 2024] Our survey on Large Language Models for Time Series, the first of its kind, has been accepted to IJCAI ’24.

[September 2023] Physics-Informed Data Denoising for Real-Life Sensing Systems has been accepted to SenSys ’23.

[August 2023] Gave an invited talk on PrimeNet: Pre-Training for Irregular Multivariate Time Series at the SIGKDD ’23 Workshop on Machine Learning in Finance, Long Beach, CA, USA. [Slide]

[June 2023] Returned to Amazon Web Services (AWS) AI as an Applied Scientist II Intern in the Speech Science Group, working on MusicLLM.

[February 2023] Towards Diverse and Coherent Augmentation for Time-Series Forecasting has been accepted to ICASSP ’23.

[November 2022] PrimeNet: Pre-Training for Irregular Multivariate Time Series has been accepted to AAAI ’23.

[August 2022] Won the Qualcomm Innovation Fellowship 2022 for our proposal on Robust Machine Learning for Mobile Sensing.

[June 2022] Joined Amazon Web Services (AWS) AI as an Applied Scientist II Intern in the Speech Science Group, working on accent-robust speech recognition.

Work Experience

Full-time Experience

Applied Scientist II Sep 2024 - Present

Forecasting for Supply Chain Optimization

Developed and launched a scalable Demand Forecasting toolkit for AWS Supply Chain, used by hundreds of B2B and B2C clients across Retail, CPG, and Manufacturing. Delivered significant accuracy gains that minimized stockouts, reduced excess inventory, and cut the manual forecasting workload for Demand Planners. Key contributions include:

- Designed a custom forecast evaluation metric that quantifies the business impact of forecast error by balancing overstock vs. stockout costs, providing clear monetary tradeoffs in terms of revenue, cash flow, and profitability.

- Engineered hierarchical forecasting across temporal, spatial, and product hierarchies using top-down, bottom-up and middle-out approaches, plus statistical and ML-based reconciliation, improving accuracy at all aggregation levels.

- Created a personalized validation and performance reporting framework tailored to each customer's business model, factoring in seasonality and vendor lead times, to reduce unnecessary retraining and model churn.

- Devised a neural split-peak attention method to handle sparse demand. It models peak demand using self-attention and non-peak demand using masked convolution, reducing post-peak bias and improving forecast accuracy.

- Enhanced model performance through multivariate feature integration, such as holidays, price changes, and promotions.
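
A simplified illustration of a cost-aware forecast metric like the one in the first bullet: over-forecast units are charged a per-unit overstock (holding) cost and under-forecast units a per-unit stockout cost. This is a minimal sketch; the function name and the cost values are hypothetical, and the production metric described above also accounts for revenue, cash flow, and profitability, which this toy version does not model.

```python
import numpy as np

def cost_weighted_error(actual, forecast, overstock_cost, stockout_cost):
    """Hypothetical metric: total monetary cost of forecast error.

    Over-forecasts (forecast > actual) leave excess inventory and are charged
    overstock_cost per unit; under-forecasts (forecast < actual) cause unmet
    demand and are charged stockout_cost per unit.
    """
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    excess = np.maximum(forecast - actual, 0.0)     # units left on the shelf
    shortfall = np.maximum(actual - forecast, 0.0)  # units of unmet demand
    return float(overstock_cost * excess.sum() + stockout_cost * shortfall.sum())

actual = [100, 120, 80]
forecast_a = [110, 110, 80]   # over by 10, under by 10
forecast_b = [100, 130, 90]   # over by 10, twice
print(cost_weighted_error(actual, forecast_a, overstock_cost=2.0, stockout_cost=5.0))  # 70.0
print(cost_weighted_error(actual, forecast_b, overstock_cost=2.0, stockout_cost=5.0))  # 40.0
```

Both forecasts have the same total absolute error (20 units), yet under these assumed costs forecast_b is cheaper because it errs toward overstock when stockouts are more expensive, a tradeoff that a plain accuracy metric hides.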

Internship Experience

Applied Scientist II Jun 2023 - Sep 2023

MusicLLM: Music Understanding with LLMs

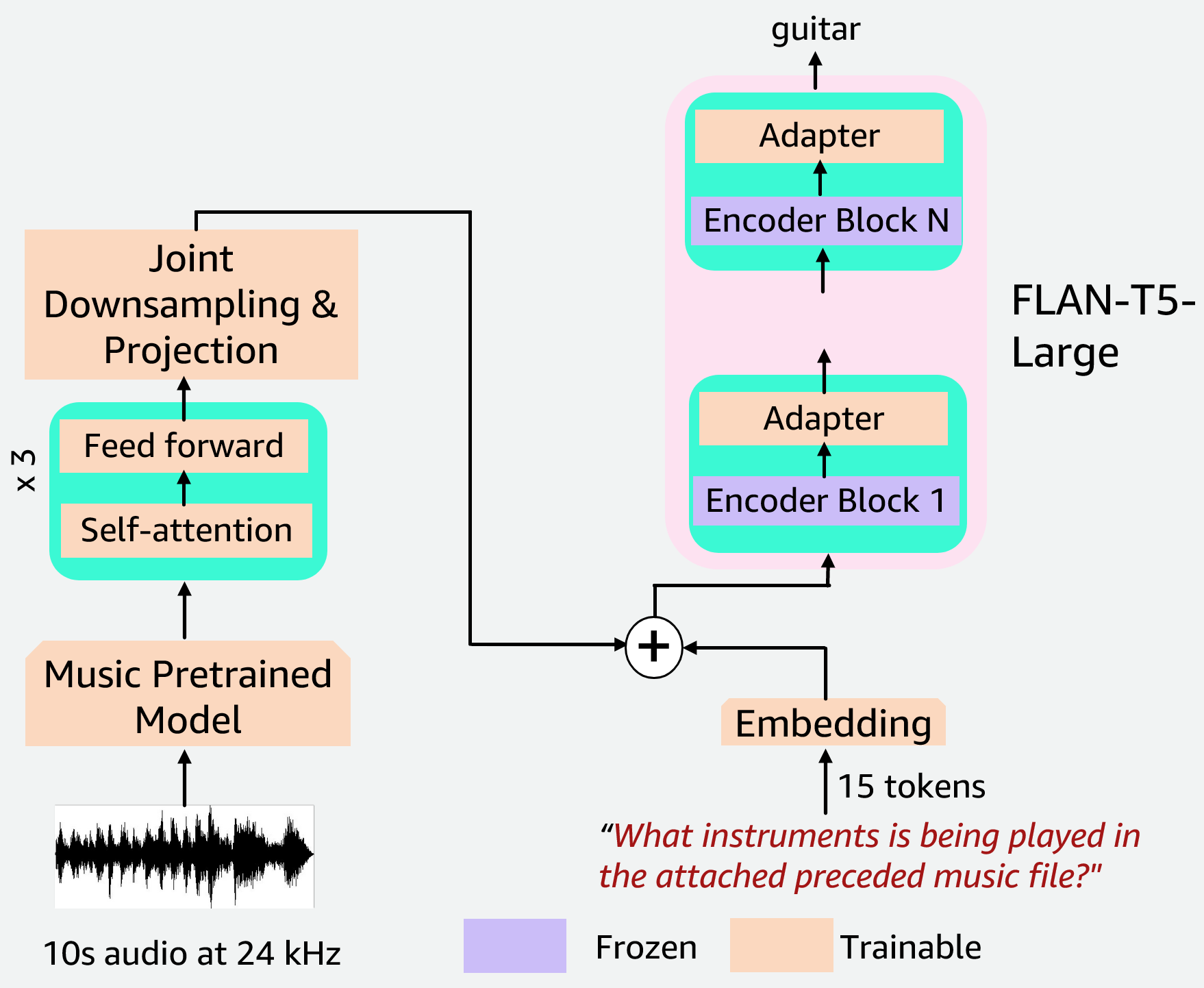

Built an LLM-enabled music understanding framework that solves music tasks such as captioning, retrieval, tagging, and classification in a text-to-text fashion. The model takes a music file and a task prompt as input and generates a text response. It uses audio features from Encodec and an instruction-tuned FLAN-T5 LLM. [Slide]

Applied Scientist II Jun 2022 - Sep 2022

MARS: Multitask Pre-training for Accent Robust Speech Recognition

Built a pre-trained model for accent-robust speech representation that improves performance on several downstream tasks, including Speech Recognition by 20.4% and Speaker Verification by 6.3%, across 12 minority accents using only a few minutes of data. [Slide]

Data Scientist Jun 2021 - Aug 2021

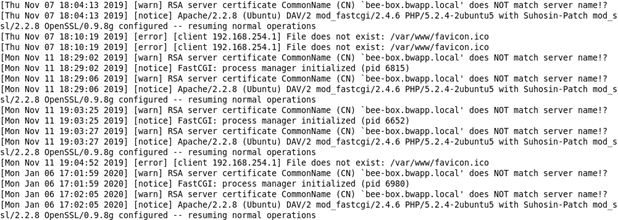

Automated Ticket Resolution from Semi-Structured Log Data

Developed an ML pipeline to automate ticket resolution: data cleaning, preprocessing, and visualization of a semi-structured, time-series corpus of system-level logs, followed by statistical feature extraction and classification. [Slide]

Software Development Engineer Jun 2020 - Sep 2020

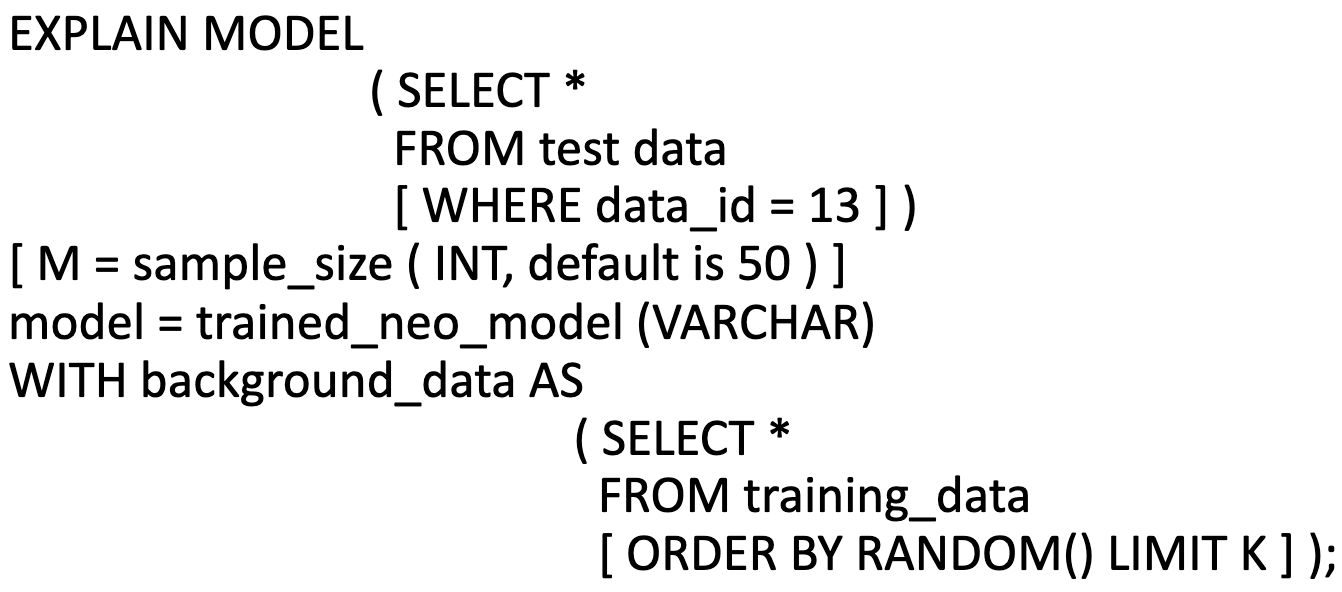

Model Explainability from SQL Surface for Redshift ML

Built a SHAP-based explainability tool for AWS Redshift ML that lets users write SQL queries to introspect model predictions. Improved execution speed by 2x and reduced memory footprint by 90% compared with the existing workflow in Amazon SageMaker.

Preview | Press Release | Blog | Documentation | Slide

Research Experience

Graduate Student Researcher Sep 2019 - Aug 2024

Data-Efficient and Robust Learning for Sensory Systems

Developed knowledge-driven learning methods leveraging physical and semantic context to enhance robustness and data efficiency in sensory systems. Applications spanned wearable sensing, motion tracking, and healthcare, resulting in 10+ peer-reviewed publications. Check out all the relevant publications.

Research Fellow Oct 2022 - Sep 2023

Context Aware Learning for IoT

Worked on two projects: 1) PILOT: Integrated physics-based constraints to improve data denoising for Inertial Navigation, CO2 Monitoring, and Air Handling in HVAC control systems [Paper | Slide]; 2) ZeroHAR: Used sensor environment knowledge and fine-grained biomechanical information from LLMs to improve Zero-Shot Wearable Human Action Recognition [Paper | Slide].

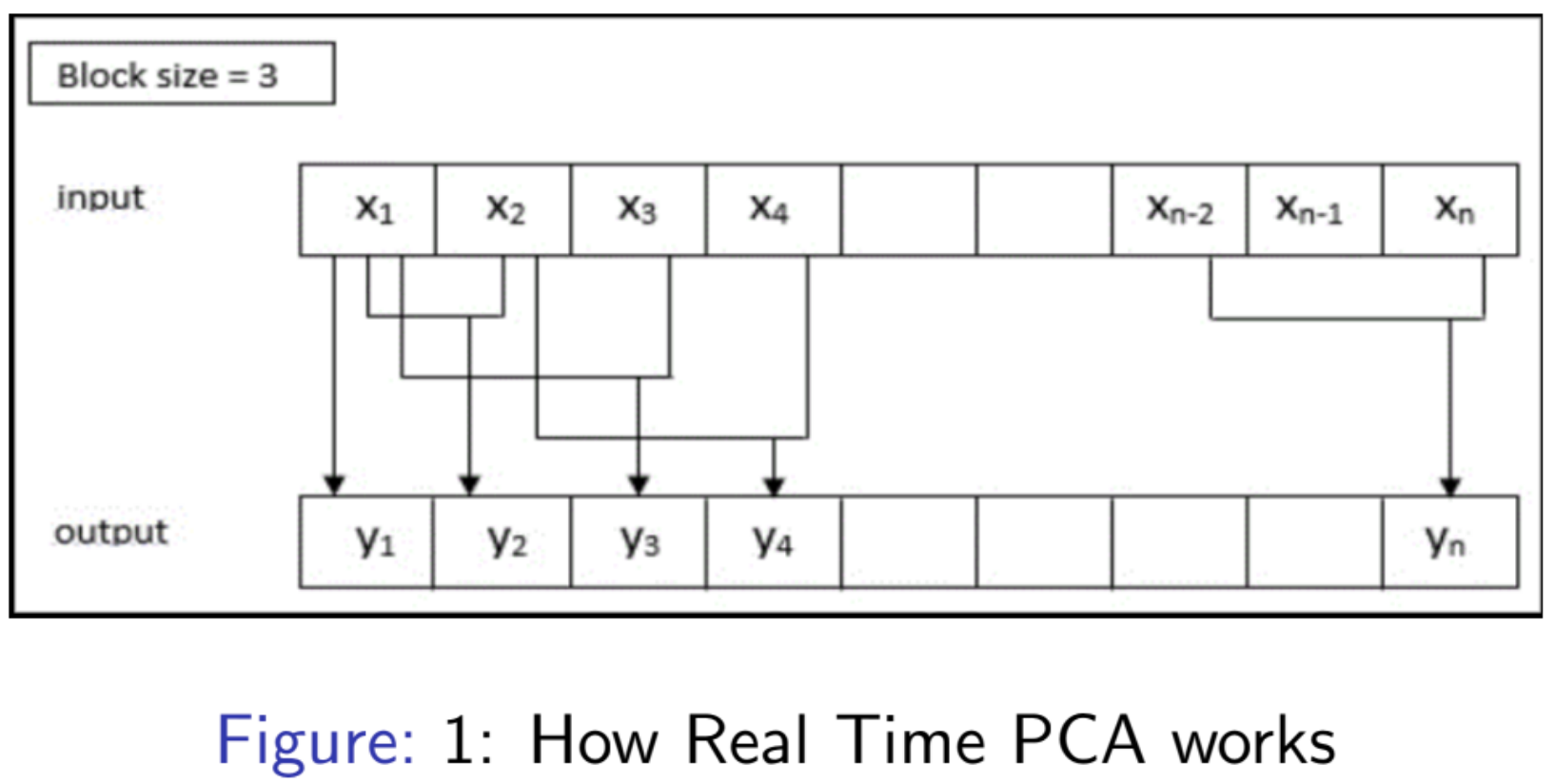

Research Assistant Jan 2019 - Aug 2019

Real-Time Principal Component Analysis

Developed a dimensionality reduction algorithm for streaming data that uses a data-driven heuristic to learn a robust, noise-resilient eigenspace. The algorithm was validated experimentally through spectral analysis and Bhattacharyya distance.

Conference | Journal | Poster | Slide

Projects

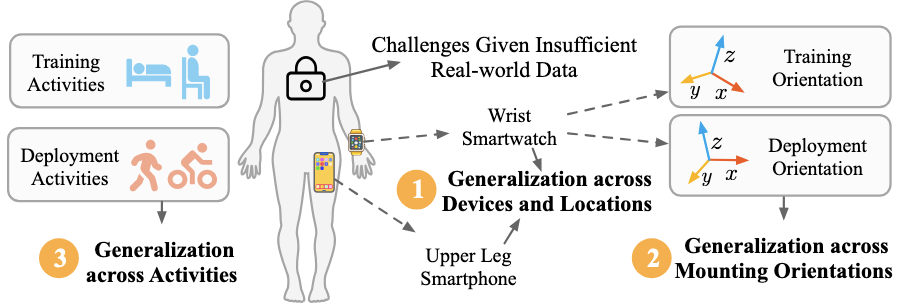

UniMTS: Unified Pre-training for Motion Time Series

First pre-trained model for human motion time series that excels in zero-shot action recognition across a broad spectrum of wearable devices, regardless of body placement or orientation.

Large Language Models for Time Series: A Survey

Pioneered the first survey on how LLMs can solve time-series tasks through prompting, time-series quantization, alignment of time series with text, vision as a bridge, and LLMs as output tools.
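
Time-series quantization, one of the approaches listed above, turns real values into discrete tokens that an LLM can consume. The sketch below shows one simple scheme (min-max scaling with uniform bins), chosen only for illustration; the function names `quantize` and `dequantize` and the bin count are hypothetical, and the methods covered by the survey differ in how they scale and bin the data.

```python
import numpy as np

def quantize(x, num_bins):
    """Map a real-valued series to integer tokens in [0, num_bins - 1]
    using uniform bins between the series minimum and maximum."""
    x = np.asarray(x, dtype=float)
    edges = np.linspace(x.min(), x.max(), num_bins + 1)
    # Digitizing against the interior edges assigns each value its bin index.
    tokens = np.digitize(x, edges[1:-1])
    return tokens, edges

def dequantize(tokens, edges):
    """Map tokens back to the centers of their bins (lossy reconstruction)."""
    centers = (edges[:-1] + edges[1:]) / 2
    return centers[tokens]

series = np.sin(np.linspace(0, 2 * np.pi, 50))
tokens, edges = quantize(series, num_bins=16)
recon = dequantize(tokens, edges)
print(tokens[:10])
print(np.abs(recon - series).max())   # bounded by half a bin width
```

More bins shrink the reconstruction error but enlarge the token vocabulary, which is the basic tradeoff any quantization scheme has to balance.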

ZeroHAR: Contextual Knowledge Augments Zero-Shot Wearable Human Activity Recognition

Developed a Zero-Shot Wearable Human Activity Recognition framework that integrates contextual knowledge about activities from LLMs with information about the sensing environment, improving zero-shot F1 score by 20%.

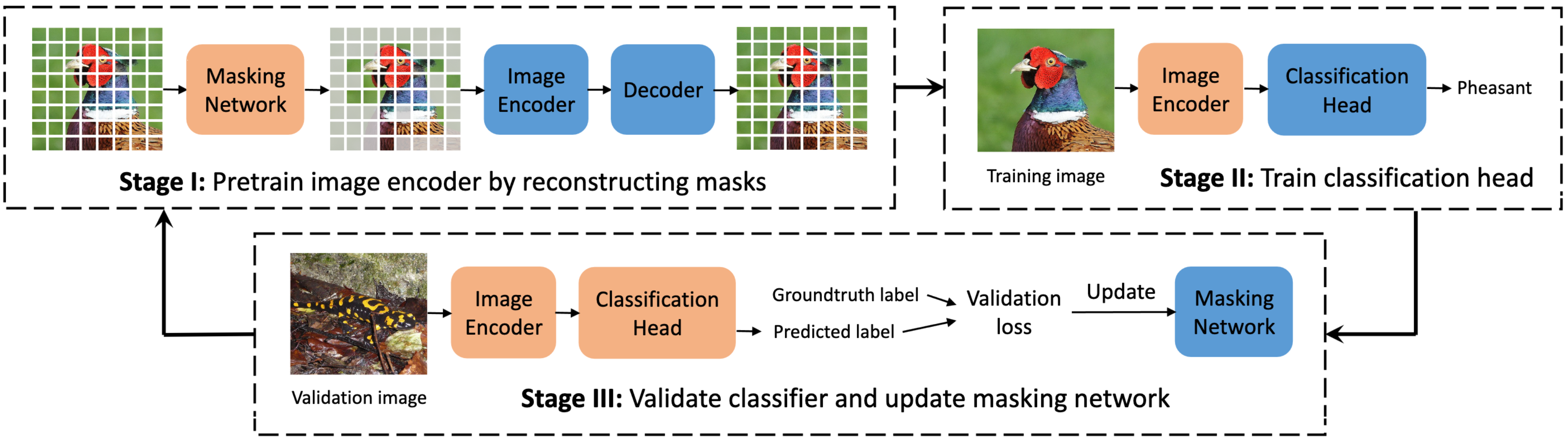

MLO-MAE: Downstream Task Guided Masking Learning in Masked Autoencoders Using Multi-Level Optimization

Developed a Multi-level Optimized Masked Autoencoder that improves image classification by coupling pretraining with fine-tuning, so that downstream fine-tuning performance guides the choice of an optimal masking strategy for pretraining.

PILOT: Physics-Informed Data Denoising for Real-Life Sensing Systems

Developed a physics-informed data denoising scheme that incorporates physics equations as constraints in the training loss, ensuring the denoised data complies with fundamental physical laws and thereby improving data quality.
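
The idea of adding a physics equation to a denoising objective can be illustrated with a small closed-form example. This sketch is not PILOT, which trains a neural denoiser; it swaps in a linear least-squares problem and assumes a hypothetical constraint that the time derivative of a noisy position trace should match a measured velocity, loosely in the spirit of the inertial navigation setting. The function names and the weight `lam` are illustrative.

```python
import numpy as np

def physics_regularized_denoise(noisy_pos, measured_vel, dt, lam):
    """Denoise a position trace so that its finite-difference derivative
    agrees with a measured velocity (a hypothetical physics constraint).

    Minimizes ||x - noisy_pos||^2 + lam * ||D x - measured_vel||^2, where D is
    the forward-difference operator divided by dt. The objective is quadratic,
    so its minimizer solves (I + lam D^T D) x = noisy_pos + lam D^T v.
    """
    y = np.asarray(noisy_pos, dtype=float)
    v = np.asarray(measured_vel, dtype=float)   # length n - 1
    n = y.size
    # Forward difference: (D x)[i] = (x[i+1] - x[i]) / dt, shape (n - 1, n)
    D = (np.eye(n, k=1) - np.eye(n))[:-1] / dt
    A = np.eye(n) + lam * D.T @ D
    b = y + lam * D.T @ v
    return np.linalg.solve(A, b)

def physics_residual(pos, measured_vel, dt):
    """Mean squared violation of the constraint d(pos)/dt = velocity."""
    return float(np.mean((np.diff(pos) / dt - measured_vel) ** 2))

rng = np.random.default_rng(0)
dt = 0.1
t = np.arange(0, 5, dt)
true_pos = np.sin(t)
true_vel = np.diff(true_pos) / dt                 # velocity consistent with true_pos
noisy_pos = true_pos + rng.normal(0, 0.05, t.size)

clean = physics_regularized_denoise(noisy_pos, true_vel, dt, lam=1.0)
print(physics_residual(noisy_pos, true_vel, dt), physics_residual(clean, true_vel, dt))
```

The penalty weight trades fidelity to the raw measurements against physical consistency; in a learned denoiser the same two terms would typically appear as a data loss plus a physics-residual loss.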

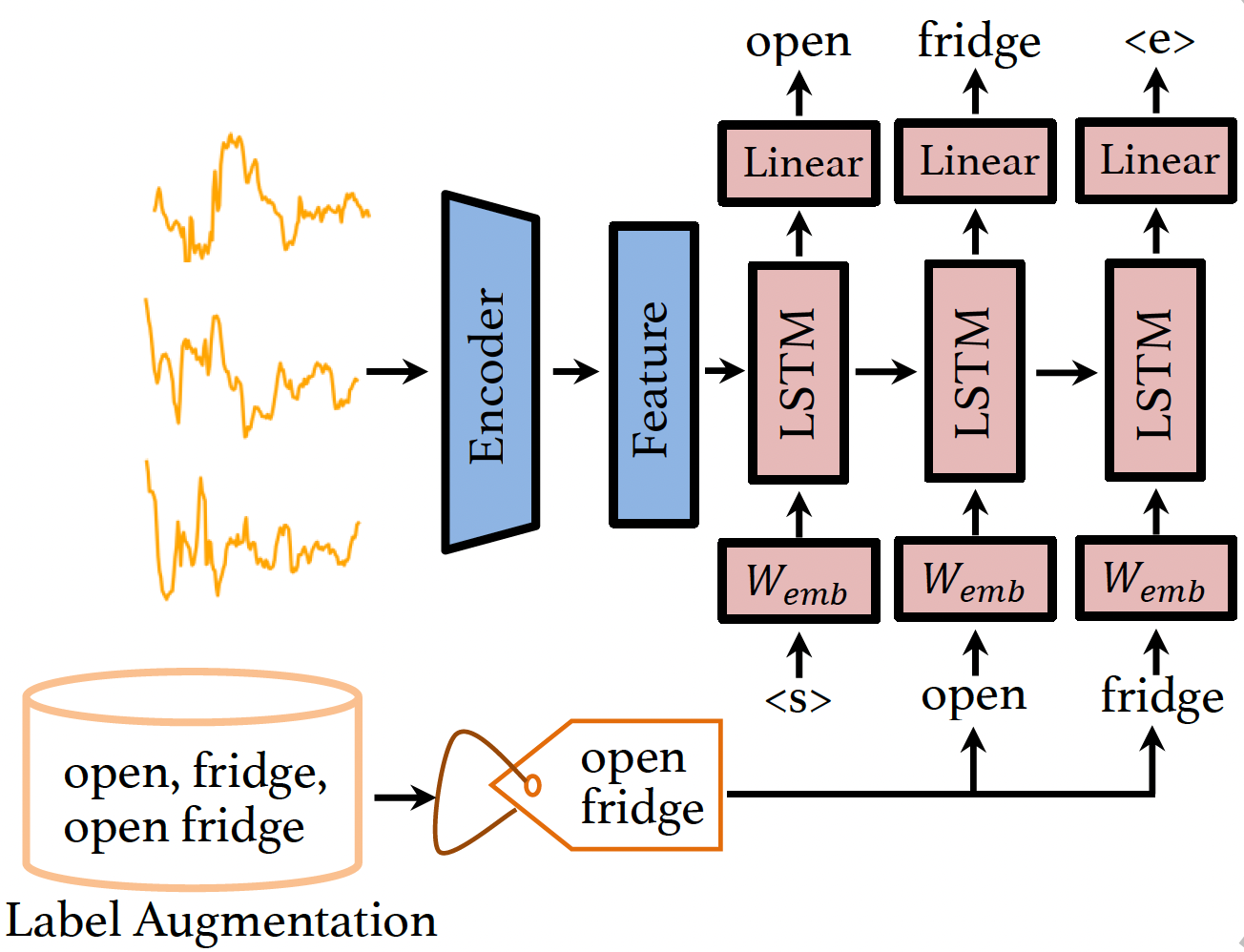

SHARE: Unleashing the Power of Shared Label Structures for Human Activity Recognition

Built an encoder-decoder architecture that models label-name semantics, harnessing the shared sub-structure of label names to distill knowledge across classes and improving human activity recognition accuracy by 1.7%.

MusicLLM: Music Understanding with LLMs

Developed an LLM with music integration that generates text responses about a music file, such as its genre, instruments used, mood, and theme. Used Encodec audio features in conjunction with the FLAN-T5 LLM.

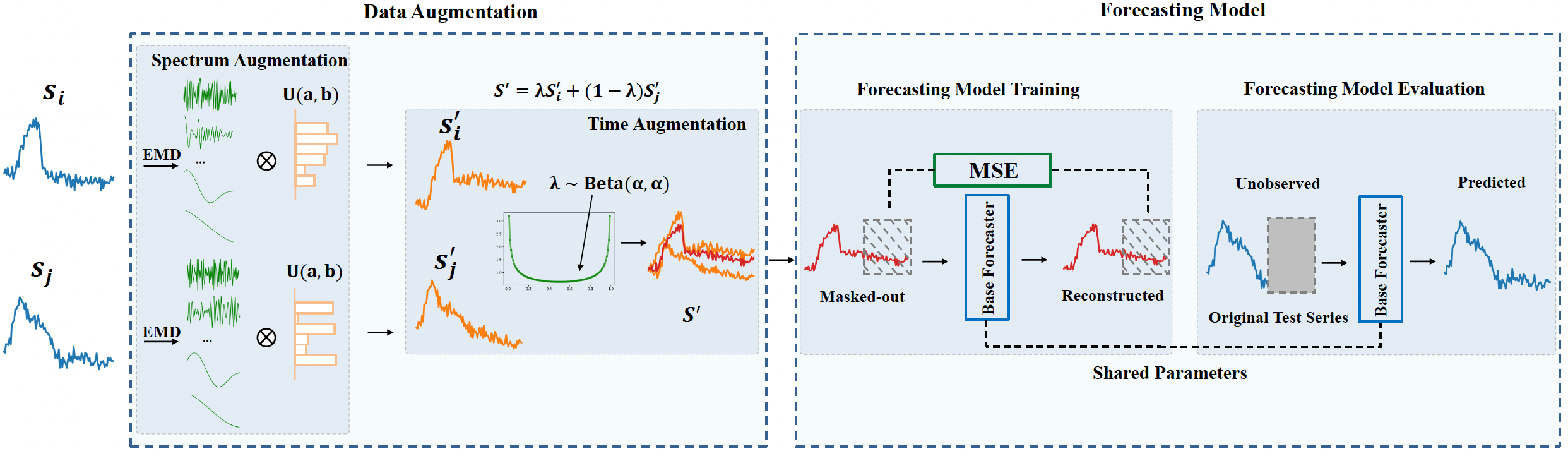

STAug: Towards Diverse and Coherent Augmentation for Time-Series Forecasting

Crafted a time-series data augmentation strategy that incorporates both time- and frequency-domain knowledge through mix-up and Empirical Mode Decomposition, respectively, improving time-series forecasting by 10.7%.
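
The time-domain half of this strategy, mix-up, can be sketched in a few lines: two training pairs are blended with a shared random weight so that the mixed forecast target stays consistent with the mixed input window. This is a generic mix-up sketch, not STAug's implementation; the Empirical Mode Decomposition step is omitted (it would need a separate EMD routine), and the function name and `alpha` value are hypothetical.

```python
import numpy as np

def mixup_pair(x1, y1, x2, y2, alpha=0.5, rng=None):
    """Blend two (input window, forecast target) pairs with a shared weight
    lam ~ Beta(alpha, alpha), so the mixed target stays consistent with the
    mixed input."""
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)
    x = lam * np.asarray(x1, dtype=float) + (1 - lam) * np.asarray(x2, dtype=float)
    y = lam * np.asarray(y1, dtype=float) + (1 - lam) * np.asarray(y2, dtype=float)
    return x, y, lam

rng = np.random.default_rng(42)
x_a, y_a = np.array([1.0, 2.0, 3.0, 4.0]), np.array([5.0, 6.0])
x_b, y_b = np.array([10.0, 10.0, 10.0, 10.0]), np.array([10.0, 10.0])
x_mix, y_mix, lam = mixup_pair(x_a, y_a, x_b, y_b, rng=rng)
print(lam, x_mix, y_mix)
```

A small `alpha` concentrates the Beta distribution near 0 and 1, so most augmented samples stay close to one of the originals; larger values produce more even blends.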

PrimeNet: Pre-Training for Irregular Multivariate Time Series

Crafted a self-supervised pretraining method for irregular, asynchronous time-series through sampling density-aware contrastive learning and time-sensitive data reconstruction techniques, improving few-shot downstream performance.

TARNet: Task-Aware Reconstruction for Time-Series Transformer

Developed a self-supervised, task-aware data reconstruction technique that uses end-task knowledge to tailor the learned representation to the end task, improving time-series classification by 2.7%.

MARS: Multitask Pre-training for Accent Robust Speech Recognition

Built a pre-trained model for accent-robust speech representation that improves performance on several downstream tasks, including Speech Recognition by 20.4% and Speaker Verification by 6.3%, across 12 minority accents using only a few minutes of data.

ESC-GAN: Extending Spatial Coverage of Physical Sensors

Devised a globally-attentive multiscale Super-Resolution GAN that uses irregularly spaced sparse data to generate high-resolution spatiotemporal data for regions with no physical sensors, reducing reconstruction loss by 3.7%.

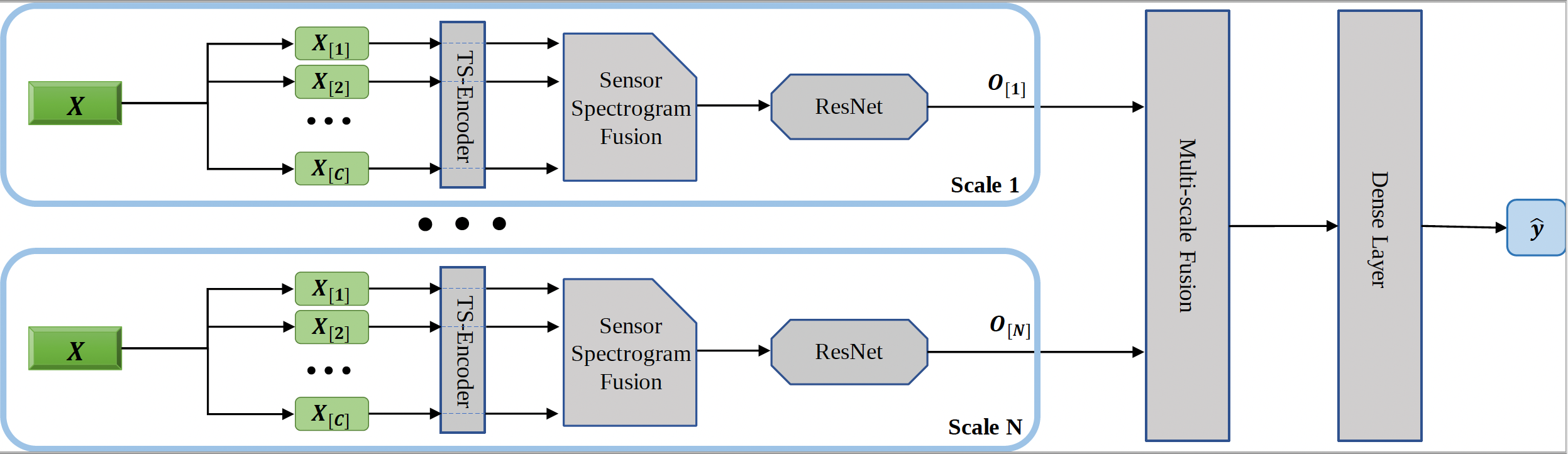

UniTS: Short-Time Fourier Inspired Neural Networks for Sensory Time Series Classification

Designed a Fourier-transform (FT)-inspired weight initialization and a learnable Short-Time FT layer that integrate time- and frequency-domain information, improving F1 score for sensory time-series classification by 2.3%.
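
Why a Fourier-inspired initialization helps can be illustrated directly: if the kernels of a strided 1-D convolution are set to the cosine and sine basis vectors of the DFT, the layer computes a short-time Fourier transform before any training, and learning can then adapt those kernels. The sketch below is a NumPy illustration under that assumption (rectangular window, no learning); the helper names are hypothetical and this is not UniTS's implementation.

```python
import numpy as np

def fourier_kernels(window):
    """Real and imaginary DFT basis vectors of length `window`, one row per
    frequency bin (window // 2 + 1 bins, as in a real FFT)."""
    n = np.arange(window)
    k = np.arange(window // 2 + 1)[:, None]
    angle = 2 * np.pi * k * n / window
    return np.cos(angle), -np.sin(angle)        # each of shape (bins, window)

def conv_stft(signal, window, hop):
    """Strided 'valid' correlation of the signal with the Fourier kernels,
    i.e. what a 1-D conv layer initialized with these weights computes.
    Uses a rectangular window (no tapering)."""
    signal = np.asarray(signal, dtype=float)
    real_k, imag_k = fourier_kernels(window)
    starts = range(0, len(signal) - window + 1, hop)
    frames = np.stack([signal[s:s + window] for s in starts])  # (frames, window)
    return frames @ real_k.T + 1j * (frames @ imag_k.T)        # (frames, bins)

rng = np.random.default_rng(1)
x = rng.normal(size=64)
spec = conv_stft(x, window=16, hop=8)
frames = np.stack([x[s:s + 16] for s in range(0, 64 - 16 + 1, 8)])
print(spec.shape, np.allclose(spec, np.fft.rfft(frames, axis=1)))  # (7, 9) True
```

Starting from an exact STFT gives the network a meaningful frequency-domain view at initialization, while keeping the kernels trainable lets it move away from the fixed Fourier basis when the data favor it.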

Automated Ticket Resolution from Semi-Structured Log Data

Developed an ML pipeline to automate ticket resolution: data cleaning, preprocessing, and visualization of a semi-structured, time-series corpus of system-level logs, followed by statistical feature extraction and classification.

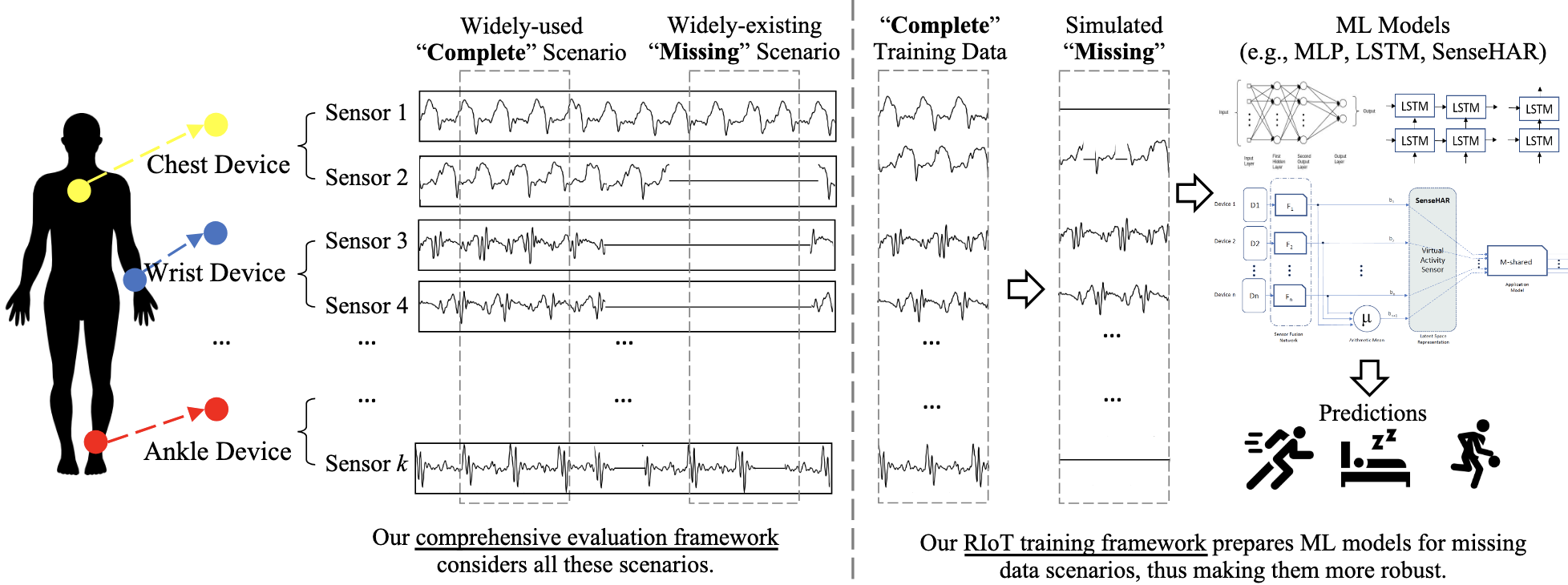

RIoT: Towards Robust Learning for Internet-of-Things

Built a comprehensive evaluation strategy and a model-agnostic training framework to facilitate robustness of machine learning models to missing feature sets in time-series data, achieving a 22% improvement in robustness.

Model Explainability for Redshift ML

Built a SHAP-based explainability tool for AWS Redshift ML that lets users write SQL queries to introspect model predictions. Improved execution speed by 2x and reduced memory footprint by 90% compared with the existing workflow in Amazon SageMaker.

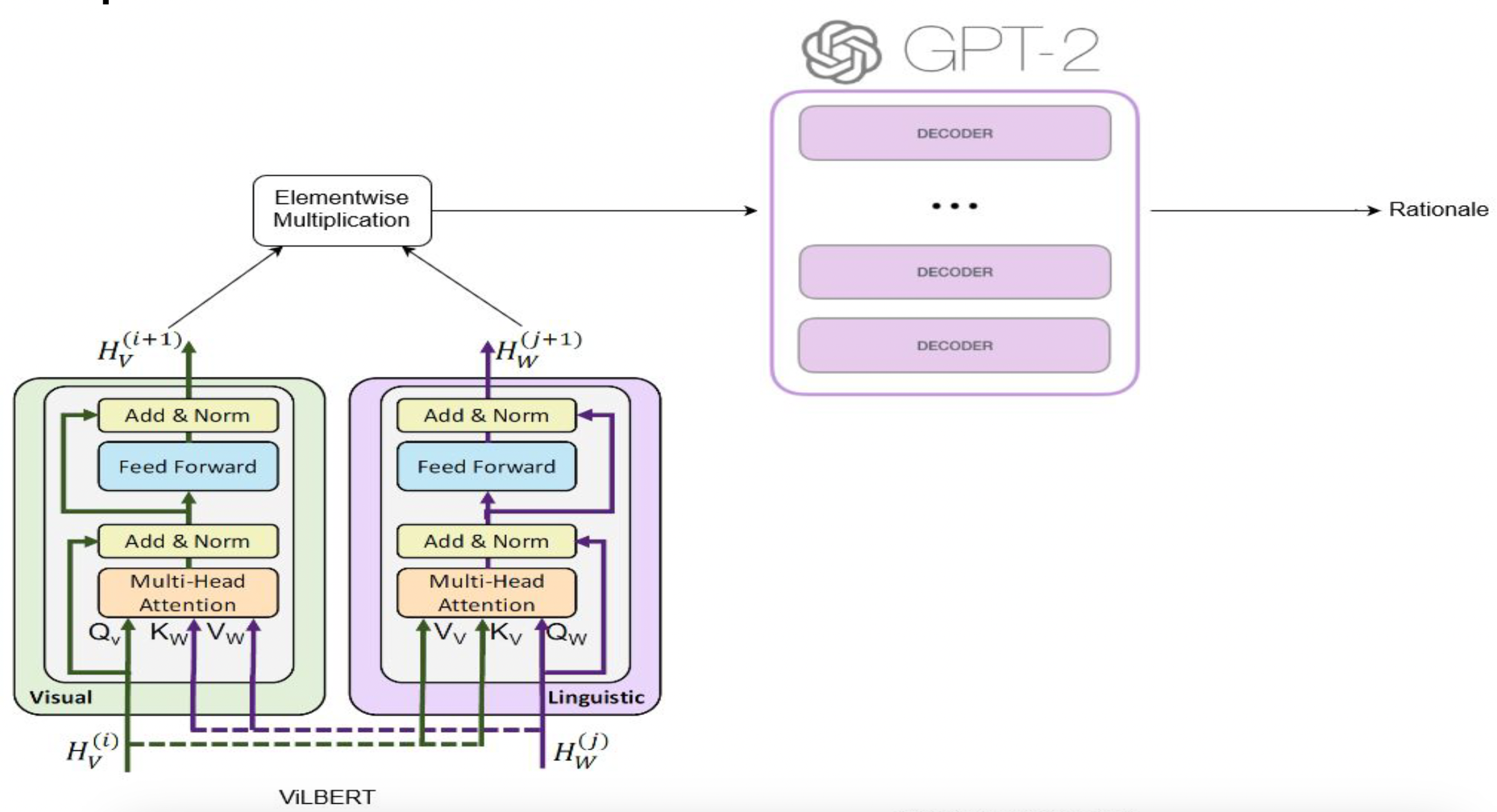

Generating Rationale in Visual Question Answering

Developed a model that generates a rationale for the selected answer in multiple-choice visual question answering. Trained a joint visual-linguistic model to build multimodal image and text representations, and a GPT-2 model to generate the text.

Real-Time Principal Component Analysis

Developed an algorithm for dimensionality reduction on streaming data that learns a robust, noise-resilient eigenspace representation using a simple, fast heuristic, achieving a Bhattacharyya Distance of over 0.90.

Extracting Real-Time Insights from Stock Market and Twitter

Built and deployed a web application, based on Real Time PCA, to generate real-time analytics on streaming data fetched from Twitter, Google Search Trends, Bangladeshi Online News Portals and Dhaka Stock Exchange.

Stock Price Forecasting from Historical Prices and News Trends

Devised a multi-modal model to forecast stock prices from historical prices and the latest news trends. Used an LSTM to model stock prices and Word2Vec to represent news headlines, then concatenated the features and fed them to an MLP.

Publications

ZeroHAR: Contextual Knowledge Augments Zero-Shot Wearable Human Activity Recognition

Ranak Roy Chowdhury, Ritvik Kapila, Ameya Panse, Xiyuan Zhang, Diyan Teng, Rashmi Kulkarni, Dezhi Hong, Rajesh Gupta, Jingbo Shang

AAAI Conference on Artificial Intelligence (AAAI), 2025.

Paper | Poster | Slide

Downstream Task Guided Masking Learning in Masked Autoencoders Using Multi-Level Optimization

Han Guo, Ramtin Hosseini, Ruiyi Zhang, Sai Ashish Somayajula, Ranak Roy Chowdhury, Rajesh Gupta, Pengtao Xie

Transactions on Machine Learning Research (TMLR).

Paper | Code

UniMTS: Unified Pre-training for Motion Time Series

Xiyuan Zhang, Diyan Teng, Ranak Roy Chowdhury, Shuheng Li, Dezhi Hong, Rajesh Gupta, Jingbo Shang

Conference on Neural Information Processing Systems (NeurIPS), 2024.

Paper | Code

Large Language Models for Time Series: A Survey

Xiyuan Zhang, Ranak Roy Chowdhury, Rajesh Gupta, Jingbo Shang

International Joint Conference on Artificial Intelligence (IJCAI), 2024, Survey Track.

Paper | Code

MusicLLM: Music Understanding with LLMs

Ranak Roy Chowdhury, Rohit Paturi, Sundararajan Srinivasan

[Under Preparation]

PILOT: Physics-Informed Data Denoising for Real-Life Sensing Systems

Xiyuan Zhang, Xiaohan Fu, Diyan Teng, Chengyu Dong, Keerthivasan Vijayakumar, Jiayun Zhang, Ranak Roy Chowdhury, Junsheng Han, Dezhi Hong, Rashmi Kulkarni, Jingbo Shang, Rajesh Gupta

Conference on Embedded Networked Sensor Systems (SenSys), 2023.

Paper | Slide

PrimeNet: Pre-training for Irregular Multivariate Time-Series

Ranak Roy Chowdhury, Jiacheng Li, Xiyuan Zhang, Dezhi Hong, Rajesh Gupta, Jingbo Shang

AAAI Conference on Artificial Intelligence (AAAI), 2023. [Travel Grant]

Paper | Code | Poster | Slide

SHARE: Unleashing the Power of Shared Label Structures for Human Activity Recognition

Xiyuan Zhang, Ranak Roy Chowdhury, Dezhi Hong, Rajesh K. Gupta, Jingbo Shang

Conference on Information and Knowledge Management (CIKM), 2023.

Paper | Code | Slide | Package

MARS: Self-supervised Multi Task Pre-training for Accent Robust Speech Representation.

Ranak Roy Chowdhury, Anshu Bhatia, Haoqi Li, Srikanth Ronanki, Sundararajan Srinivasan

[Under Submission]

STAug: Towards Diverse and Coherent Augmentation for Time-Series Forecasting

Xiyuan Zhang, Ranak Roy Chowdhury, Jingbo Shang, Rajesh Gupta, Dezhi Hong

International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2023.

Paper | Code | Poster | Slide

TARNet: Task-Aware Reconstruction for Time-Series Transformer

Ranak Roy Chowdhury, Xiyuan Zhang, Jingbo Shang, Rajesh K. Gupta, Dezhi Hong

SIGKDD Conference on Knowledge Discovery and Data Mining (SIGKDD), 2022. [Travel Grant]

Paper | Code | Poster | Slide

ESC-GAN: Extending Spatial Coverage of Physical Sensors

Xiyuan Zhang, Ranak Roy Chowdhury, Jingbo Shang, Rajesh K. Gupta, Dezhi Hong

International Conference on Web Search and Data Mining (WSDM), 2022.

Paper | Code | Poster | Slide

UniTS: Short-Time Fourier Inspired Neural Networks for Sensory Time Series Classification

Shuheng Li, Ranak Roy Chowdhury, Jingbo Shang, Rajesh K. Gupta, Dezhi Hong

Conference on Embedded Networked Sensor Systems (SenSys), 2021.

Paper | Code | Slide

RIoT: Towards Robust Learning for Internet-of-Things

Ranak Roy Chowdhury, Dezhi Hong, Rajesh K. Gupta, Jingbo Shang

[Under Submission]

Paper

Real-Time Principal Component Analysis

Ranak Roy Chowdhury, Muhammad Abdullah Adnan, Rajesh K. Gupta

Transactions on Data Science (TDS), Volume 1, Issue 2.

Paper | Poster | Slide

Real-Time Principal Component Analysis

Ranak Roy Chowdhury, Muhammad Abdullah Adnan, Rajesh K. Gupta

International Conference on Data Engineering (ICDE), 2019.

Paper | Poster | Slide

Education

University of California San Diego

Ph.D. in Computer Science Sep 2019 - Aug 2024

- Specialization: Data Mining

- Advisors: Professor Jingbo Shang, Professor Rajesh K. Gupta

- Thesis Proposal: Data-Efficient Learning for Sensory Time Series [Slide]

- Thesis Dissertation: Efficient Learning for Sensor Time Series in Label Scarce Applications [Dissertation | Slide]

University of California San Diego

M.S. in Computer Science Sep 2019 - Mar 2022

- Specialization: Artificial Intelligence and Machine Learning

- Advisors: Professor Jingbo Shang, Professor Rajesh K. Gupta

- Thesis: Learning Across Irregular and Asynchronous Time Series [Dissertation | Slide]

Bangladesh University of Engineering and Technology

B.Sc. in Computer Science and Engineering Jul 2014 - Oct 2018

- Major: Artificial Intelligence and Machine Learning

- Advisor: Muhammad Abdullah Adnan

- Thesis: Real Time Principal Component Analysis [Dissertation | Paper | Poster | Slide]

Honors & Awards

- Lead organizer of the inaugural workshop on “AI for Supply Chain: Today and Future” at SIGKDD 2025, Toronto, ON, Canada. [Workshop | Proposal]

- Invited Keynote Speaker at SIGKDD 2023 Workshop on Machine Learning in Finance, Long Beach, CA, USA. [Workshop | Slide]

- Qualcomm Innovation Fellowship 2022 for our proposal on "Robust Machine Learning for Mobile Sensing". One of the 19 winners among 132 participants across North America. [News | Proposal]

- Recipient of Travel Awards: AAAI 2023, SIGKDD 2022

- Halıcıoğlu Data Science Institute Graduate Fellowship 2019 to fund investigations in data science, in recognition of my research accomplishments. One of the 10 winners among 3906 applicants. [News]

- Selected to participate in the Heidelberg Laureate Forum 2020. [Certificate]

- Selected to participate in the Cornell, Maryland, Max Planck Pre-doctoral Research School 2019, Saarbrücken, Germany. [Certificate]

- Research Fellowship awarded by Department of CSE, UCSD to support graduate studies.

- Dean's List and University Merit Scholarship awarded by Department of CSE, BUET, in recognition of excellent undergraduate academic performance.

Services

- Lead organizer: SIGKDD 2025 Workshop on "AI for Supply Chain: Today and Future", Toronto, ON, Canada

- Program Committee Member: SIGKDD 2024

- Invited Keynote Speaker: SIGKDD 2023 Workshop on "Machine Learning in Finance", Long Beach, CA, USA

- Teaching: TA for CSE 158 - Web Mining and Recommender Systems

- Mentored 2 UCSD CSE MS students on a research project that led to a paper in the AAAI 2025 conference proceedings, Philadelphia, Pennsylvania, USA